Delta Interactive Voice Assistant (DIVA)

Company: Delta Air Lines | Date: December 2019

Utilized Natural Language Processing and AI models to help Flight Attendants find items on the aircraft and to simplify their reporting

Roles: Lead UX/UI Designer, User Researcher

Technology used: Sketch, InVision, Natural Language Processing, AI Assistant

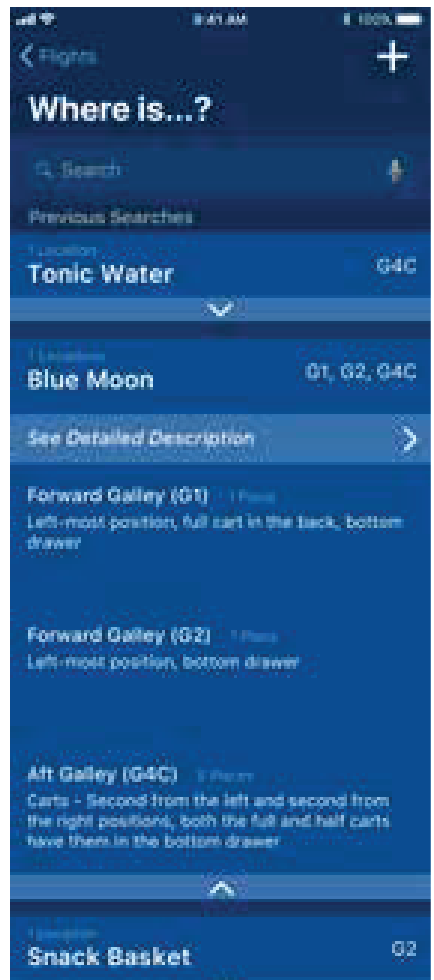

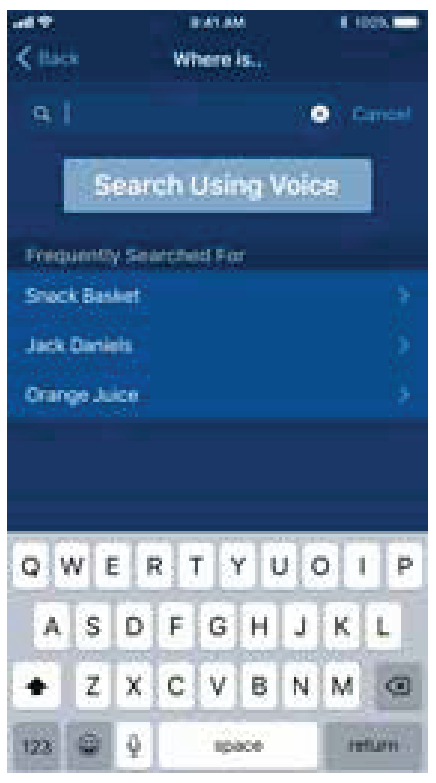

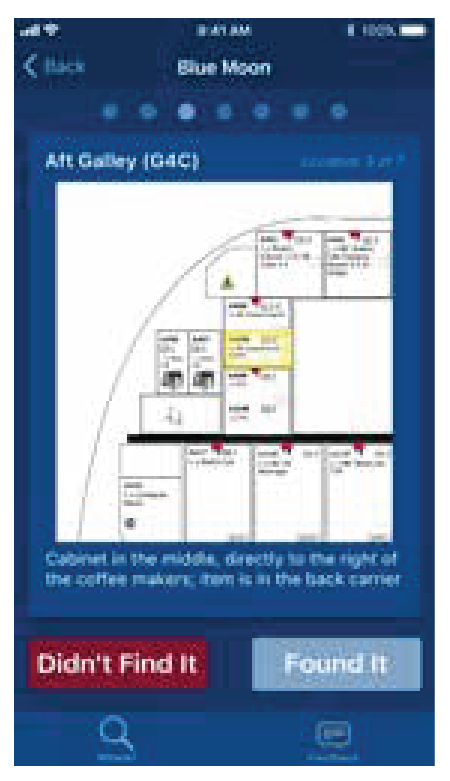

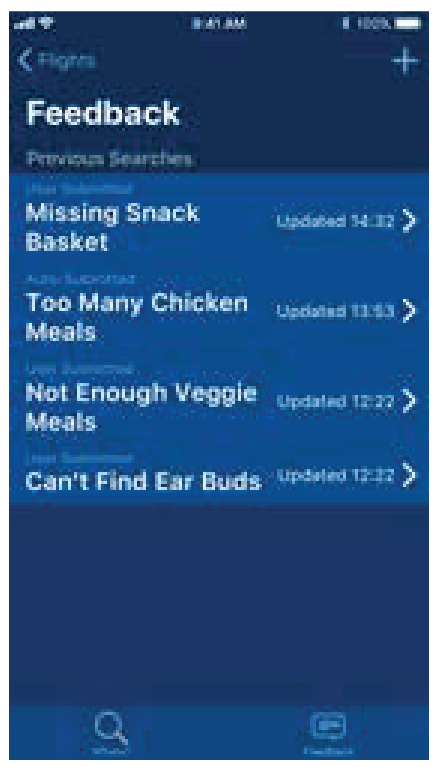

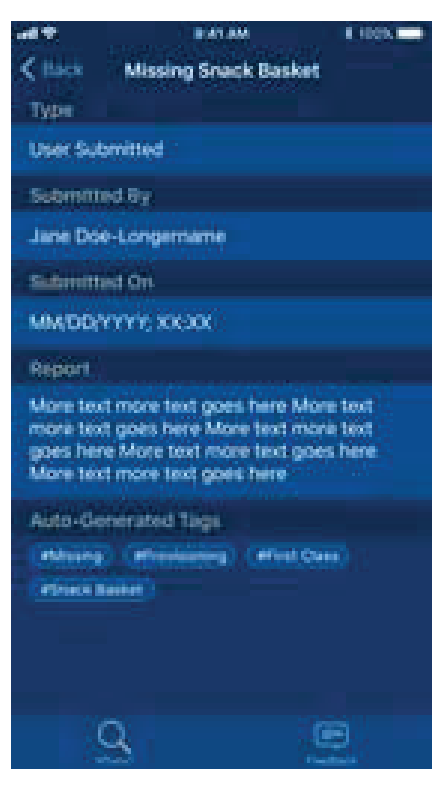

The minimum viable product (MVP) of the DIVA application had two main purposes: helping flight attendants find items in the highly varied galleys of our aircraft, and giving them an easier feedback mechanism.

When an aircraft is loaded, crews follow a specific plan for placing all of the carts and crates in the galley. Even with that plan, Delta Air Lines has 21 different aircraft models and over 50 different interior configurations. Flight attendants are trained to work on every model of aircraft we own, but it is incredibly difficult for them to remember where the extra milk is on every one of them.

During research on a previous project with our On-Board Services team, we also found that the feedback mechanism flight attendants use to file reports is difficult to use, unintuitive, and inaccurate at capturing the category of each report.

To move more quickly on this initiative, we reused the user research from our eCabin project and jumped straight into wireframing.

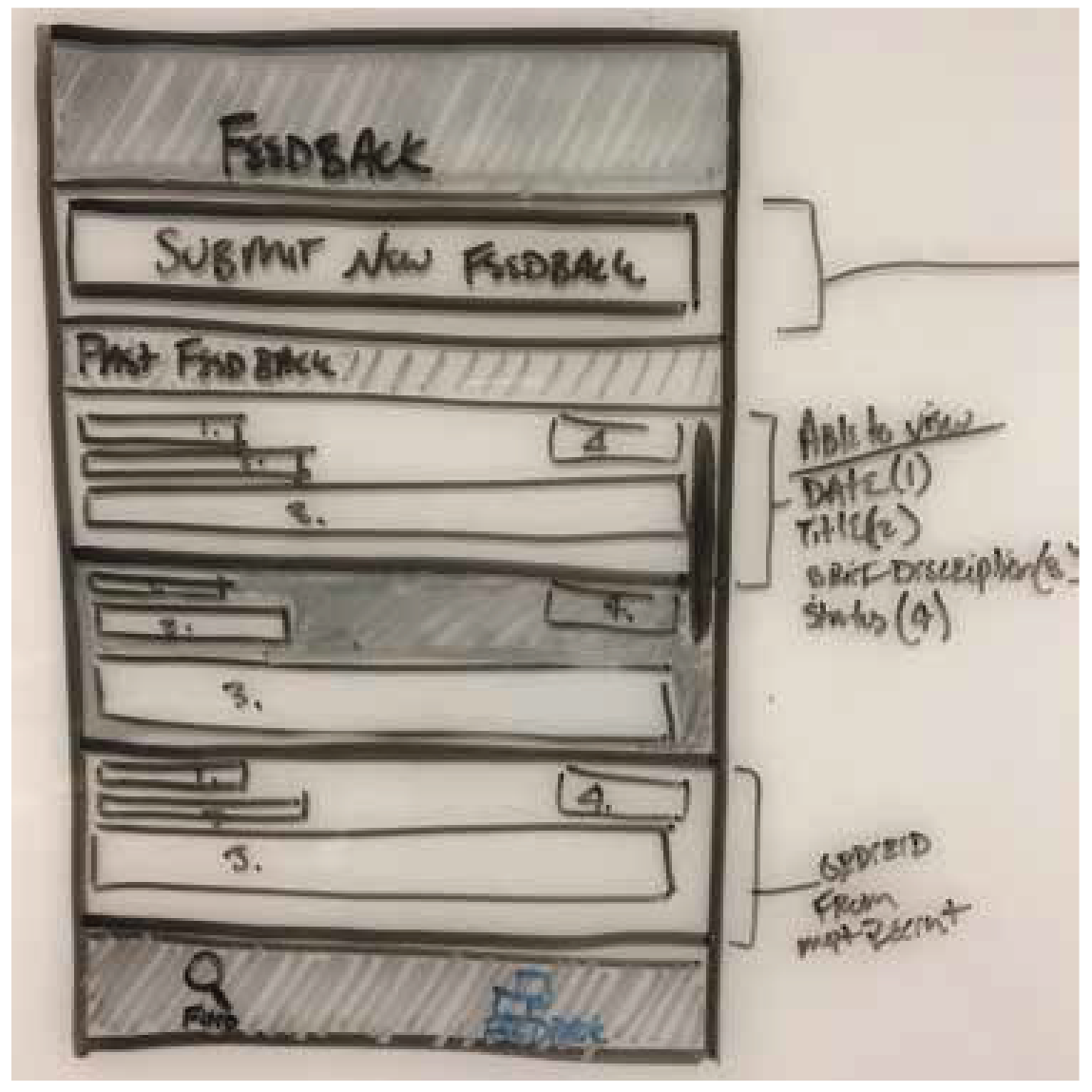

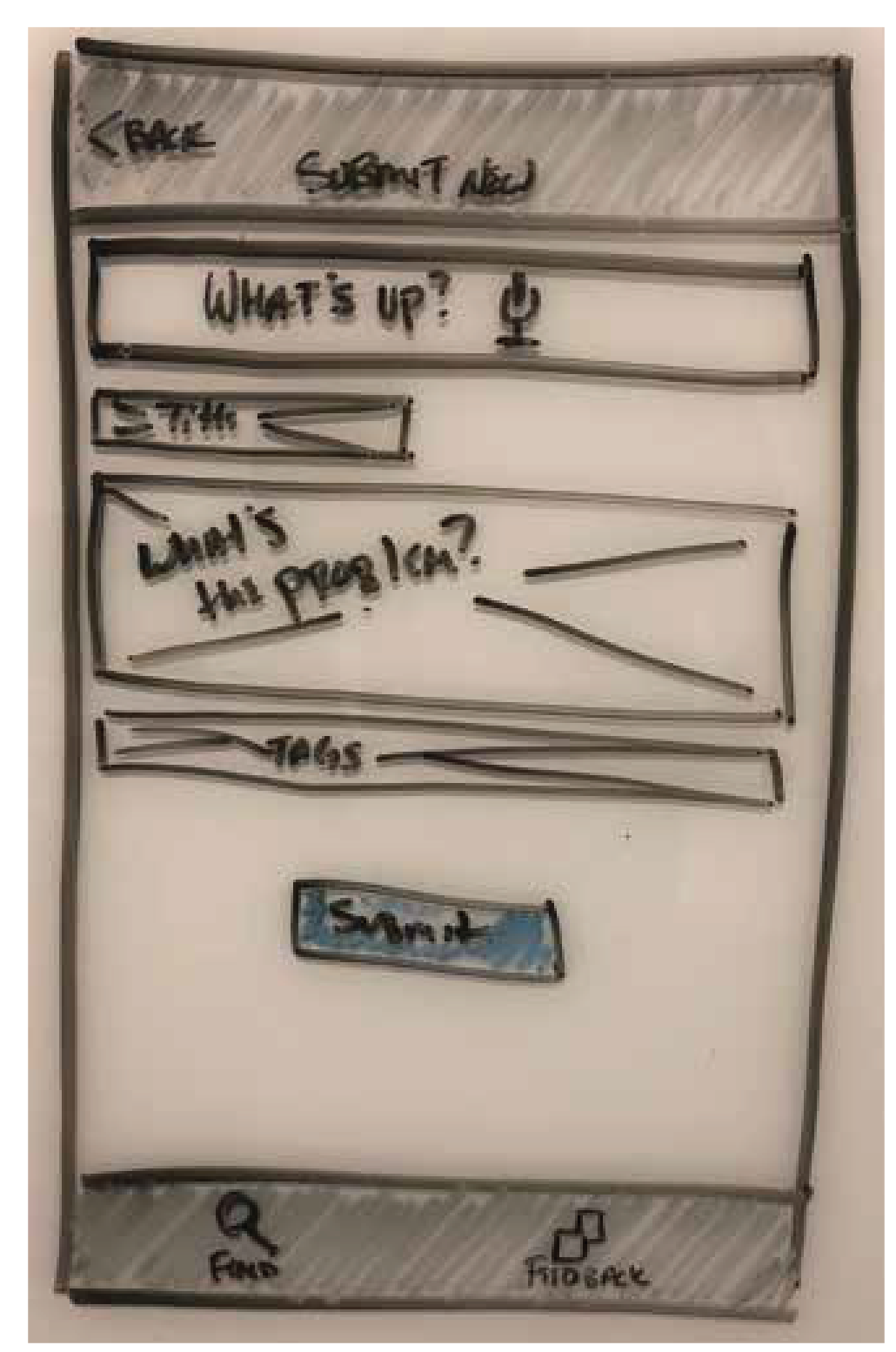

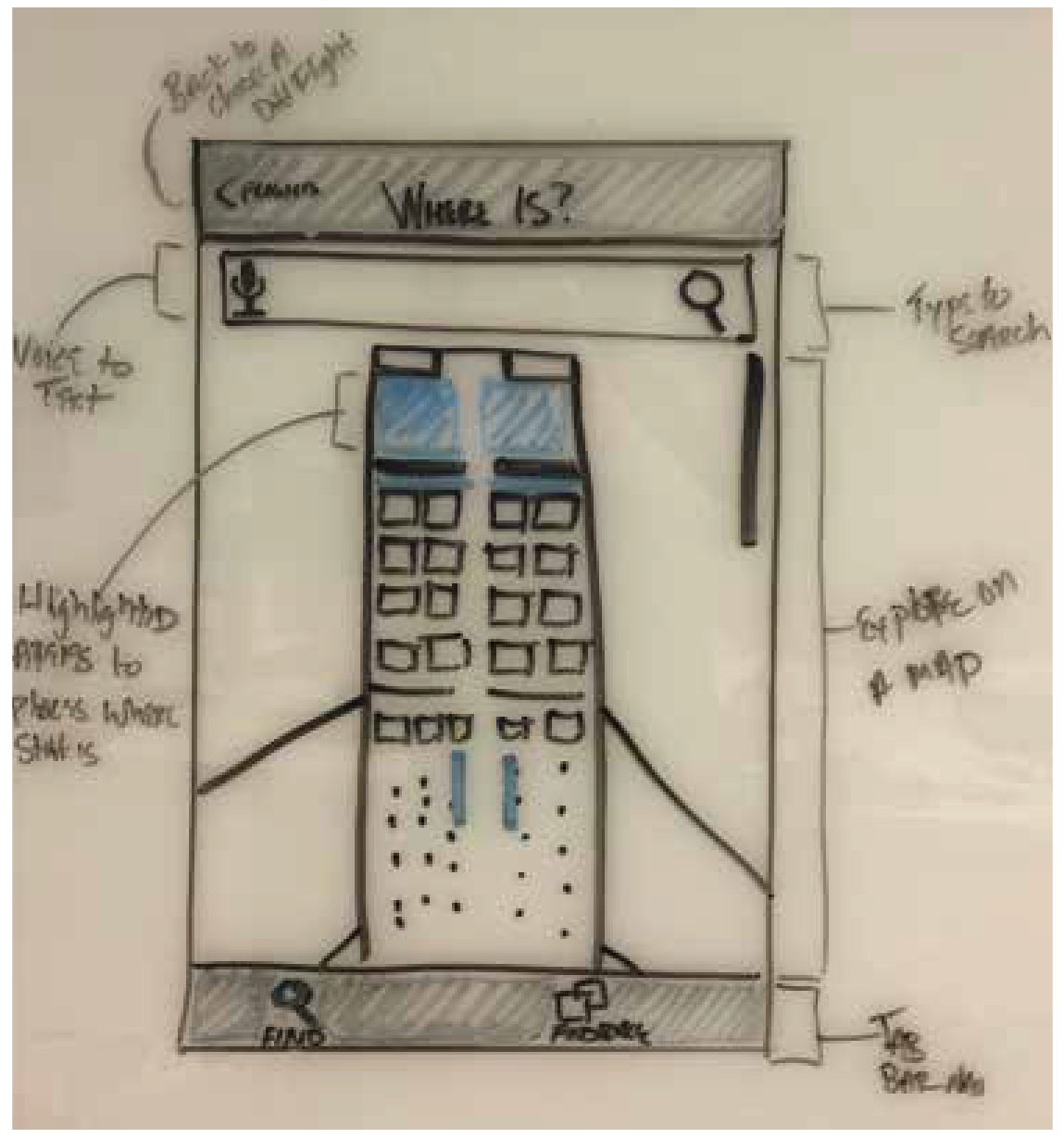

I like to start design work with hand-drawn screens.

Laying out the UX Flow

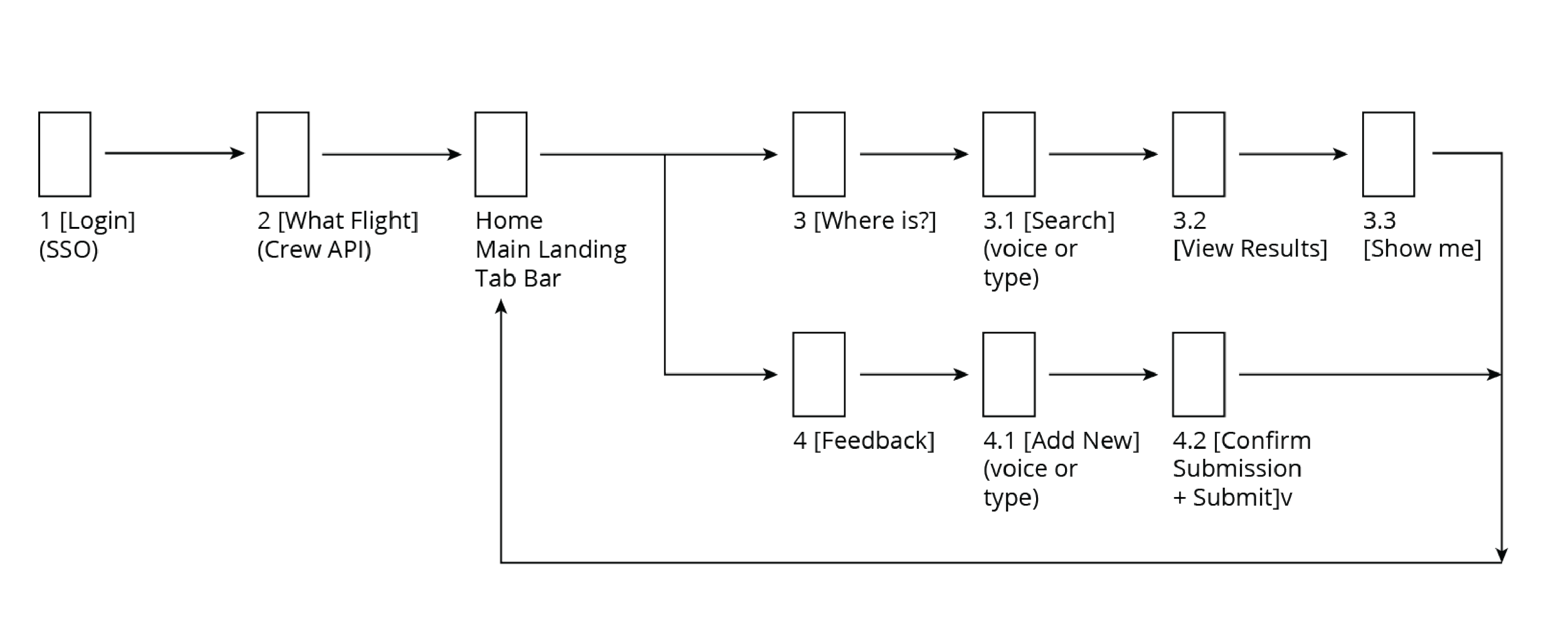

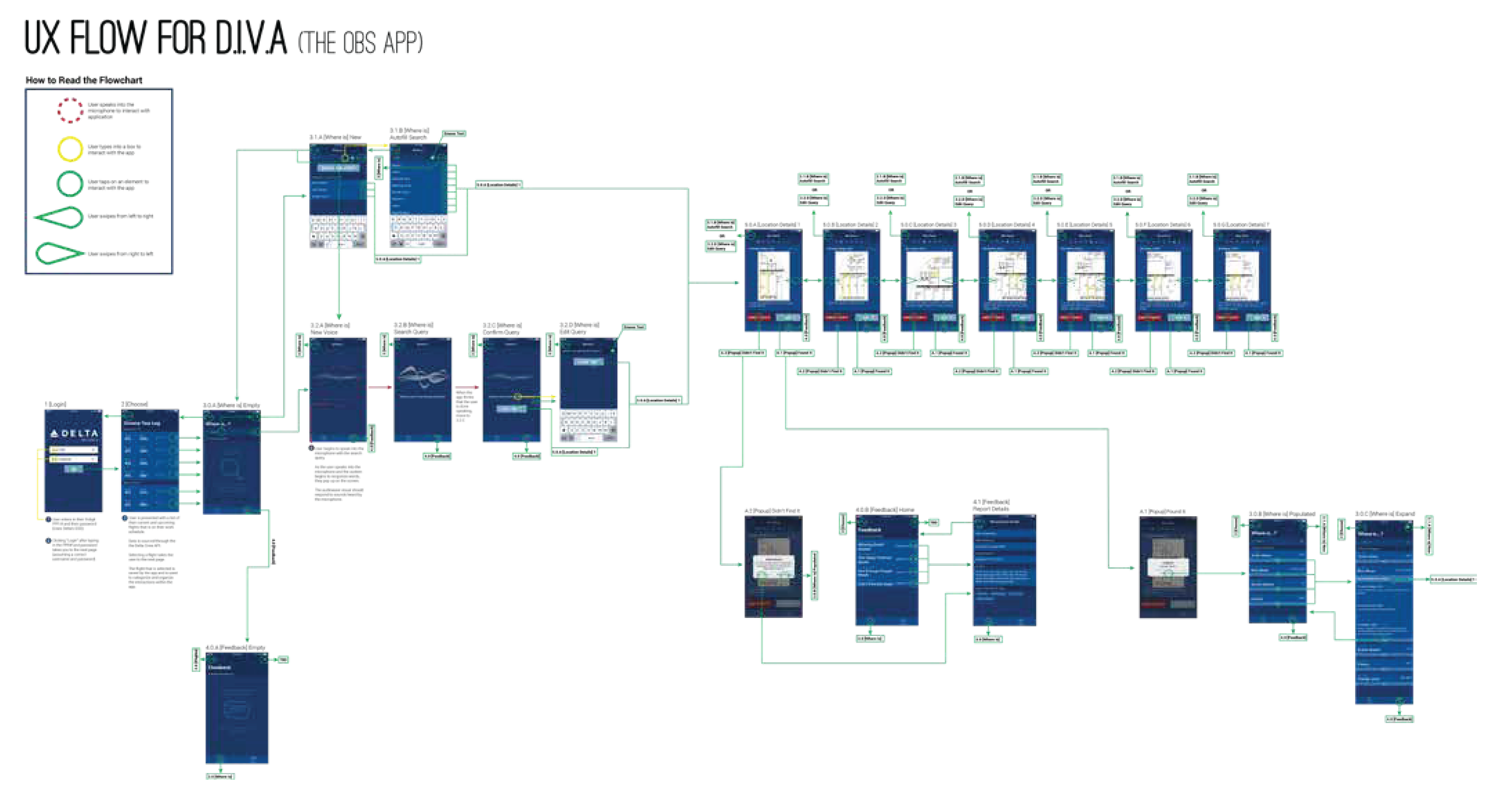

To get a better sense of how a user would move through the application, I like to map out the screens and visualize the path a user would take between them.

This application had a major impact, but was relatively simple overall.

To produce wireframes quickly for our developers, I reused the components and style guide I had created for our eCabin project.

Final UX Flow + Interaction Diagram

Once I had a set of wireframes, I built a quick prototype in InVision* and ran several user tests with co-workers and Flight Attendants to gather feedback.

After validating the user flow, feature set, and overall design with our users, I created a UX flow and interaction diagram to hand off to our developer, who would use it to build the final MVP.

*RIP InVision 🪦

Outcomes

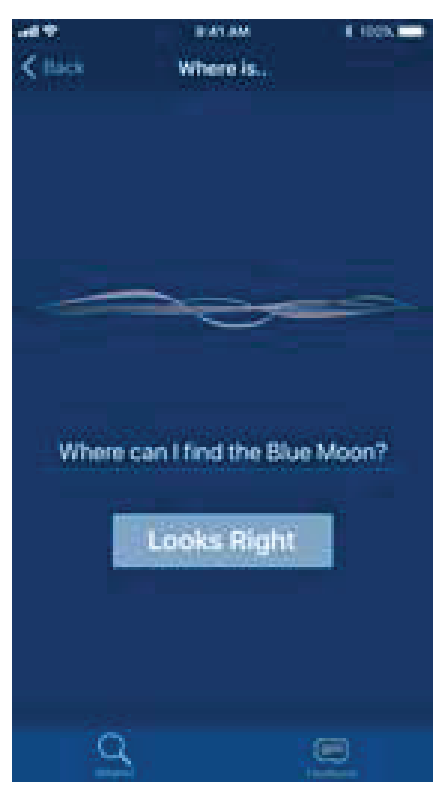

During user testing, our team also identified what could be the biggest drawback of an application that uses Natural Language Processing in flight: the overall noise level in the cabin. We recognized that this noise could reduce the accuracy of the Natural Language Processing.

My team and I took 11 test flights across a wide variety of aircraft types and ages to test our MVP application. On these flights, we tested "The Rainbow Passage" (a passage used in speech science because it contains all the phonemes of the English language) at every location within the aircraft. We also tested several phrases in Spanish.

This test was incredibly successful: our app outperformed other digital assistants (e.g., Google, Siri) by 26%.*

*This test was conducted in late 2019, and the result reflects the accuracy and precision of digital assistants at that time.

We presented our MVP application and the findings from our user research between late 2019 and early 2020.

Unfortunately, I left Delta Air Lines in March 2020 and was not able to see this project through to full implementation.

Ultimately, I believe the project was stalled indefinitely by the COVID-19 pandemic.